The brain is the most complicated object in the universe. Can researchers solve its greatest mysteries?

In the middle of 2023, a study conducted by the HuthLab at the University of Texas sent shockwaves through the realms of neuroscience and technology. For the first time, people’s thoughts and impressions were translated into continuous natural language, using a combination of artificial intelligence (AI) and brain imaging technology.

This is the closest science has yet come to reading someone’s mind. While advances in neuroimaging over the past two decades have enabled non-responsive and minimally conscious patients to control a computer cursor with their brain, HuthLab’s research is a significant step closer towards accessing people’s actual thoughts. As Alexander Huth, the neuroscientist who co-led the research, told the New York Times: “This isn’t just a language stimulus. We’re getting at meaning – something about the idea of what’s happening. And the fact that’s possible is very exciting.”

Combining AI and brain-scanning technology, the team created a non-invasive brain decoder capable of reconstructing continuous natural language from brain activity, an approach that could one day help people otherwise unable to communicate with the outside world. The development of such technology, alongside the parallel development of brain-controlled motor prosthetics that give paralyzed patients some renewed mobility, holds great promise for people living with conditions such as locked-in syndrome and quadriplegia.

In the longer term, this could lead to wider public applications such as Fitbit-style health monitors for the brain and brain-controlled smartphones. On January 29, 2024, Elon Musk announced that his tech startup Neuralink had implanted a chip in a human brain for the first time. He had previously told followers that Neuralink’s first product, Telepathy, would one day allow people to control their phones or computers “just by thinking”.

But alongside such technological developments come major ethical and legal concerns. Not only privacy but people’s very identity may be at risk. As we enter this new era of so-called mind-reading technology, we will also need to consider how to ensure that its potential to help people is not outweighed by its potential to do harm.

Humanity’s greatest mapping challenge

The brain is the most complicated object in the universe. It contains roughly 86 billion neurons, each connected to around 7,000 other neurons that send between 10 and 100 signals every second. Artificial neural networks were originally inspired by the brain and the idea of neurons working together. Now, deep learning is in turn helping us understand much more clearly how the brain works.

By fully mapping the structure and function of a healthy human brain, we can determine with great precision what goes awry in diseases of the brain and mind. In 2009, the US National Institutes of Health launched the Human Connectome Project with the goal of building a map of the structure and function of a healthy human brain. Similar initiatives were launched in Europe in 2013 (the Human Brain Project) and China in 2016 (the China Brain Project).

This daunting endeavor may still take generations to complete – but the scientific ambition of mapping and reading people’s brains dates back more than two centuries. With the world having been circumnavigated many times over, Antarctica discovered and much of the planet charted, humanity was ready for a new (and even more complicated) mapping challenge – the human brain.

These efforts began in earnest in the late 18th century with the development of a systematic framework for scientists to ask how the brain and its regions produce psychological experiences – our thoughts, feelings and behavior. One of the earliest attempts was phrenology, pioneered by the Austrian physician and anatomist Franz Joseph Gall.

While this long-discredited science may now be best known for the decorative busts sold in flea markets, it was all the rage by the early 19th century. Gall and his assistant Johann Spurzheim suggested that the brain was organized into 35 psychological functions, each linked to a different underlying region.

Just as you might start lifting dumbbells if you want larger biceps, phrenology argued that the more you use a particular psychological function, the more the brain region underlying it should grow – leading to a corresponding lump in your skull. According to Gall and Spurzheim, some of these functions (including memory, love of offspring and the instinct to kill) were shared with animals, whereas others (such as wit, poetic ability and morality) were uniquely human.

Throughout the British Empire and later in the U.S., phrenology was used to justify classism, colonialism, slavery and white supremacy. Queen Victoria had readings done on her children, but Napoleon Bonaparte was not a fan. When Gall moved to Paris in 1807, where he did much of his phrenological theorizing, France’s emperor pronounced: “It is an ingenious fable which might seduce the gens du monde, but could not stand the scrutiny of the anatomist.”

In the 1860s, “locationist” views of how the brain worked made a comeback – though the scientists leading this research were keen to distinguish their theories from phrenology. French anatomist Paul Broca discovered a region of the left hemisphere responsible for producing speech – thanks in part to his patient, Louis Victor Leborgne, who at age 30 lost the ability to say anything other than the syllable “tan.” Today, Patient Tan remains one of psychology’s most famous case studies, and Broca’s area, in the frontal cortex, is one of the most important language regions of the brain, playing a critical part in putting our thoughts into words.

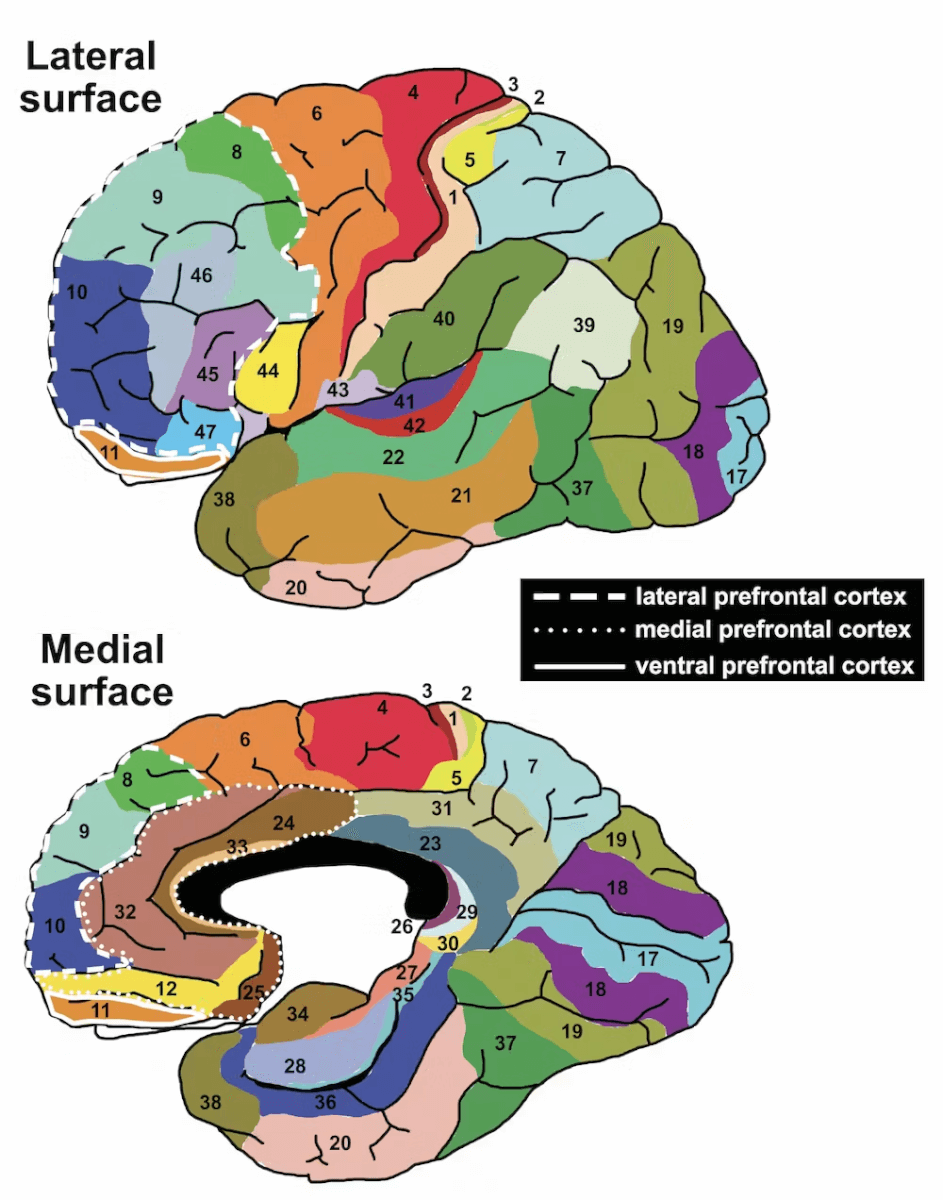

Similarly, German neuroanatomist Korbinian Brodmann’s map of 52 distinct regions of the cerebral cortex, first published in 1909, is still an important tool of contemporary neuroscience. Today’s neuroscientists continue to ask some of the same questions as these pioneers: are our thoughts, feelings and behavior produced by the collective action of the whole brain, or by specific brain regions?

In modern neuroscience studies, high-tech scanning tools such as positron emission tomography (PET) and functional magnetic resonance imaging (fMRI) allow researchers to map the brain by measuring changes in local blood flow that are linked to changes in local neural activity. This approach builds on the findings of American physiologist John Fulton almost a century ago. Fulton was treating Walter K, a 26-year-old sailor suffering from headaches and failing vision. When the patient began using his eyes after leaving a dark room, the noise he sensed in the back of his head, over the visual cortex, grew stronger. This stronger pulse of activity was not replicated by other sensory stimuli, such as smelling tobacco or vanilla.

Over the remainder of the 20th century, this first observation of the link between local cerebral blood flow and brain function was built on by neuroscientists including American Seymour Kety, Swedish neurophysiologist David Ingvar and his Danish collaborator Niels Lassen. Their pioneering work paved the way for modern brain mapping, including the groundbreaking work of BrainGate, a multidisciplinary research unit originating in the neuroscience department at Brown University in the US state of Rhode Island.

Prototype brain-computer interfaces (BCIs) record and decode a patient’s brain activity, translating it into actions that can be carried out by a neural cursor, prosthetic limb or powered exoskeleton. The ultimate goal is wireless, non-invasive devices that help patients communicate and move with precision in the real world. AI is critical to this goal, and is already being used to help BCI systems produce finely controlled, rapid motor movements.
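To make the idea of “decoding” more concrete, here is a minimal sketch of one simple approach: a linear decoder that maps the firing rates recorded on each electrode channel to an intended two-dimensional cursor velocity, fitted with ridge regression on a calibration session. Everything in it is a hypothetical stand-in: the data are synthetic, and the channel count, tuning model and function names (`fit_linear_decoder`, `decode_velocity`) are assumptions for illustration. Real BCI systems, including BrainGate’s, have used more sophisticated decoders such as Kalman filters.

```python
import numpy as np

def fit_linear_decoder(rates, velocities, ridge=1.0):
    """Fit weights W so that velocities ~ [rates, 1] @ W (ridge regression)."""
    X = np.hstack([rates, np.ones((len(rates), 1))])  # append a bias column
    penalty = ridge * np.eye(X.shape[1])
    penalty[-1, -1] = 0.0  # leave the bias term unpenalized
    # Closed-form ridge solution: W = (X^T X + penalty)^-1 X^T Y
    return np.linalg.solve(X.T @ X + penalty, X.T @ velocities)

def decode_velocity(rates, W):
    """Apply the fitted decoder to new firing rates."""
    X = np.hstack([rates, np.ones((len(rates), 1))])
    return X @ W

# Synthetic calibration data (hypothetical): each channel is "tuned" to a
# preferred movement direction, on top of a baseline rate plus noise.
rng = np.random.default_rng(0)
n_samples, n_channels = 2000, 96
intended = rng.normal(size=(n_samples, 2))            # intended (vx, vy)
tuning = rng.normal(size=(2, n_channels))             # preferred directions
baseline = rng.uniform(5, 20, size=n_channels)        # baseline rates (Hz)
rates = baseline + intended @ tuning + rng.normal(scale=0.5, size=(n_samples, n_channels))

# Calibrate on the first 1,500 samples, then evaluate on the held-out rest.
W = fit_linear_decoder(rates[:1500], intended[:1500])
decoded = decode_velocity(rates[1500:], W)
corr_x = np.corrcoef(decoded[:, 0], intended[1500:, 0])[0, 1]
corr_y = np.corrcoef(decoded[:, 1], intended[1500:, 1])[0, 1]
print(f"held-out correlation: x={corr_x:.2f}, y={corr_y:.2f}")
```

A linear map is the simplest choice that still captures the classic observation that motor-cortex neurons fire more for movements in their preferred direction; a Kalman filter adds a model of how velocity evolves smoothly from one moment to the next.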

In 2004, BrainGate began the first clinical trial using BCIs to enable patients with impaired motor systems (including spinal cord injuries, brainstem infarctions, locked-in syndrome and muscular dystrophy) to control a computer cursor with their thoughts.

Patient MN, a quadriplegic since being stabbed in the neck in 2001, was the trial’s first patient. After neuroscientist Leigh Hochberg’s team implanted electrodes over the hand-arm region of the patient’s primary motor cortex, they reported that Patient MN was able to open emails, draw figures using a paint program, and operate a television using a cursor. In addition, brain activity was linked to the patient’s prosthetic hand and robotic arm, enabling rudimentary actions including grasping and transporting an object. What’s more, these tasks could be done while the patient was having a conversation, suggesting they did not even demand the full concentration of the patient.

Other quadriplegic patients subsequently used BCI devices connected to multi-joint robotic arms to pick up and drink from a cup. In 2015, a patient with locked-in syndrome was shown operating a point-and-click keyboard five years after the device’s implantation. Improved decoding algorithms then sharpened cursor control, raising typing speeds from 24 characters per minute in 2015 to 39 characters per minute two years later.

Also in 2017, BrainGate clinical trials reported the first evidence that BCIs could be used to help patients regain movement of their own limbs by bypassing the damaged portion of the spinal cord. One patient with a high-cervical spinal cord injury was able to reach and grasp a cup eight years after sustaining his injury.

Then in 2021, the BrainGate team reported that quadriplegic patients were now using a wireless system in their own homes to control a tablet computer – an important first step toward a future where BCI devices can help people move and communicate outside the confines of the hospital or laboratory. Furthermore, the researchers said they anticipate “significant advances and paradigm shifts in neural signal processing, decoding algorithms and control frameworks” in the quest to make such devices available to the wider public.

Beyond BrainGate’s successes, another team led by American neurosurgeon Edward Chang recently reported using surgically implanted electrocorticography (ECoG) electrodes to create a “digital avatar” that could convey what a paralyzed patient wants to say. With the help of AI, the BCI decoded the speech-related muscle movements the patient was trying to make (as opposed to decoding the actual semantic content).

Activity patterns emerging from the specific brain region that is critical for speech are the key focus for this type of BCI. One expert not involved in the research told the Guardian: “This is quite a jump from previous results. We’re at a tipping point.”

A new era of ‘mind reading’ technology

Brain activity has long been recorded by non-invasive imaging methods such as fMRI and electroencephalography (EEG). But recording brain activity, once envisaged primarily as a tool for diagnosis and monitoring, is now also a core element of the latest neural communication and prosthetic devices.

A landmark moment came in 2012, when a team led by Canada-based neuroscientist Adrian Owen used neuroimaging to establish a line of communication with people suffering from disorders of consciousness. Despite being behaviorally non-responsive or minimally conscious, these patients were able to answer yes-or-no questions just by using their minds. For patients unable to communicate via facial or eye movements (methods that had been available to locked-in patients for many years), this was a very promising development.

Now, a decade on, the HuthLab research at the University of Texas constitutes a paradigmatic shift in the evolution of communication-enabling neuroimaging systems.

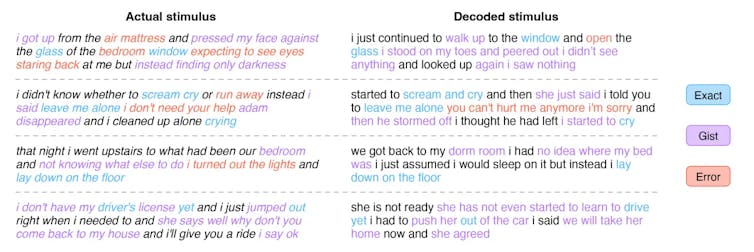

In the study’s first stage, participants were placed in an fMRI scanner and their brain activity was recorded while they listened to 16 hours of podcasts (the model’s training dataset consisted of 82 stories, each 5 to 15 minutes long, taken from The Moth Radio Hour and Modern Love). These brain activity data were then linked to the audio the participants had been listening to, in order to map what their brain activity patterns looked like when they had specific semantic content in their minds.
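This first stage amounts to fitting what neuroscientists call an encoding model: a mapping from features describing the stimulus to the brain response it evokes. The sketch below assumes the simplest version, a ridge regression from per-volume feature vectors to every voxel at once, using copies of the features delayed by a few scan volumes because blood flow lags neural activity. The feature vectors, sizes, noise level and function names (`add_delays`, `fit_ridge`) are hypothetical stand-ins, not the study’s actual features, data or code.

```python
import numpy as np

def add_delays(features, delays=(1, 2, 3, 4)):
    """Concatenate copies of the features shifted later by each delay (in scan
    volumes), since the blood-flow response peaks seconds after neural activity."""
    n_t, n_f = features.shape
    out = np.zeros((n_t, n_f * len(delays)))
    for i, d in enumerate(delays):
        out[d:, i * n_f:(i + 1) * n_f] = features[:n_t - d]
    return out

def fit_ridge(X, Y, alpha=10.0):
    """Closed-form ridge regression, solving for all voxels simultaneously."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ Y)

rng = np.random.default_rng(1)
n_vols, n_features, n_voxels = 1200, 16, 50             # hypothetical sizes
stimulus = rng.normal(size=(n_vols, n_features))        # stand-in semantic features
X = add_delays(stimulus)
true_weights = rng.normal(size=(X.shape[1], n_voxels))
bold = X @ true_weights + rng.normal(scale=2.0, size=(n_vols, n_voxels))

# Train on the first 1,000 volumes; test on volumes the model has never seen.
W = fit_ridge(X[:1000], bold[:1000])
pred = X[1000:] @ W
voxel_r = [np.corrcoef(pred[:, v], bold[1000:, v])[0, 1] for v in range(n_voxels)]
print(f"median held-out voxel correlation: {np.median(voxel_r):.2f}")
```

Once fitted, a model like this can predict the brain response to any candidate sequence of words, which is what makes decoding new, unheard stories possible.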

Next, the same participants were either played new audio they had never heard before or asked to imagine a story. The decoder was then applied to this new set of brain activity data to “reconstruct” the stories the participants had been listening to or imagining, with some striking results. For instance, one participant was played this audio: “I don’t have my driver’s license yet and I just jumped out right when I needed to, and she says: ‘Well, why don’t you come back to my house and I’ll give you a ride?’ I say OK.”

The decoder reconstructed it as: “She is not ready – she has not even started to learn to drive, yet I had to push her out of the car. I said: ‘We will take her home now’ and she agreed.”

While there were also a considerable number of mistakes over the course of the trial, reconstructing continuous language solely on the basis of brain activity patterns, including some exact word matches, is arguably the closest we have yet come to truly reading someone’s thoughts.

Whereas the brain’s capacity to produce motor intentions is shared across species, the ability to produce and perceive language is uniquely human. Thus, decoding actual semantic content from brain activity in regions used in language perception (primarily the association and prefrontal regions of the brain’s cortex) seems more fundamental to what makes us human.

Also, the HuthLab study used non-invasive fMRI technology, a form of neuroimaging that measures blood oxygen levels in the brain in order to make inferences about brain activity. The disadvantage of fMRI is that it can only take slow measurements of brain signals (typically, one brain volume every two or three seconds), whereas natural speech runs at several words per second. The study overcame this by using generative AI language models (akin to ChatGPT), which predict the probability of word sequences and thus which words are most likely to come next in someone’s thoughts.
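The idea can be sketched as a beam search: a language model proposes plausible next words, an encoding model predicts the brain response each candidate should evoke, and only the few candidate sequences that best match the recorded activity are kept. Everything below is a toy under stated assumptions: a hand-written bigram table stands in for the GPT-style language model, random vectors stand in for semantic features, the names (`BIGRAMS`, `beam_search`) are hypothetical, and each word gets its own simulated response, whereas a real fMRI volume blends several seconds of speech.

```python
import numpy as np

# Toy bigram language model (hypothetical probabilities): P(next | previous).
BIGRAMS = {
    "<s>":   {"she": 0.5, "i": 0.5},
    "she":   {"said": 0.6, "drove": 0.4},
    "i":     {"said": 0.5, "drove": 0.5},
    "said":  {"ok": 0.7, "home": 0.3},
    "drove": {"home": 0.8, "ok": 0.2},
    "home":  {"ok": 1.0},
    "ok":    {"home": 1.0},
}
VOCAB = sorted({w for nxt in BIGRAMS.values() for w in nxt})

rng = np.random.default_rng(2)
EMBED = {w: rng.normal(size=8) for w in VOCAB}   # stand-in semantic features
ENCODER = rng.normal(size=(8, 20))               # features -> 20 simulated voxels
NOISE_SD = 0.5

def predicted_response(word):
    """Encoding model: the brain response a word is expected to evoke."""
    return EMBED[word] @ ENCODER

def beam_search(observed, beam_width=3):
    """Keep the beam_width best word sequences, scoring each by language-model
    log-probability plus the Gaussian log-likelihood of the observed data."""
    beams = [(["<s>"], 0.0)]
    for response in observed:
        candidates = []
        for words, score in beams:
            for nxt, p in BIGRAMS[words[-1]].items():
                resid = response - predicted_response(nxt)
                loglik = -np.sum(resid ** 2) / (2 * NOISE_SD ** 2)
                candidates.append((words + [nxt], score + np.log(p) + loglik))
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
    return beams[0][0][1:]  # best sequence, without the start token

true_words = ["she", "drove", "home", "ok"]
observed = [predicted_response(w) + rng.normal(scale=NOISE_SD, size=20) for w in true_words]
print(" ".join(beam_search(observed)))
```

Because the language model only proposes continuations it considers plausible, the decoder never has to compare the brain data against every possible sentence; the beam width trades accuracy against computation.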

The researchers also worked with participants watching silent short film clips. They demonstrated that the system could decode semantic content not only from auditory perception but also from visual perception.

Importantly, they also explicitly addressed the potential threat to a person’s mental privacy posed by this kind of technology. Jerry Tang, one of the study’s lead researchers, stated:

We take very seriously the concerns that it could be used for bad purposes and have worked to avoid that. We want to make sure people only use these types of technologies when they want to and that it helps them.

The very fact this semantic decoder has to be trained on each person separately, with their cooperation over a long period of time, constitutes a robust safeguard. In other words, one of the major hurdles in the development of language decoders – the fact they are not universally applicable – constitutes one of the strongest safeguards against privacy violations.

However, while there is no risk of a malevolent company being able to read the thoughts of a random person in the street any time soon, there are nonetheless important ethical, legal and data protection concerns that must be considered as this technology develops.

We have already seen the consequences of unfettered corporate access to personal data and online behavior. Although we are a long way off from neural data being collected and processed at such scale, it is important to consider burgeoning ethical questions in the early stages of technological progress.

The ethical implications are immense

Losing the ability to communicate is a deep cut to one’s sense of self. Restoring this ability gives the patient greater control over their lives and their ability to navigate the world – but it could also give other entities, such as corporations, researchers and other third parties, an uncomfortable degree of insight into, or even control over, the lives of patients.

No other type of intimate biological data, not even information about our genomes or biometrics, comes as close to approximating our private inner lives as neural data does. The ethical implications of giving scientific and corporate entities access to such data are potentially immense.

This is reflected in Resolution 51/3 of the UN Human Rights Council, which commissioned a study on “the impact, opportunities and challenges of neurotechnology with regard to the promotion and protection of all human rights” in time for the council’s 57th session in September 2024. However, whether the introduction of novel human rights is warranted to address the challenges posed by neurotechnology remains a hotly debated issue among human rights experts and advocacy groups.

The NeuroRights Foundation, based at Columbia University in New York, argues that new rights surrounding neurotechnologies will be needed for all humans to preserve their privacy, identity and free will. The potential vulnerability of disabled patients makes this a particularly important problem. For example, Parkinson’s disease, a neurodegenerative disease that affects movement, is often accompanied by dementia, which affects the ability to reason and think clearly, and therefore a patient’s ability to make informed decisions.

In line with this approach, Chile was the first country to adopt legislation addressing the risks inherent to neurotechnology. It not only introduced a new constitutional right to mental integrity, but is also in the process of adopting a bill that would ban the sale of neurodata and require all neurotech devices, even those intended for general consumers, to be regulated as medical devices. The proposed legislation recognizes the intensely personal nature of neural data and treats it as akin to organ tissue, which cannot be bought or sold, only donated. But this legislation has also faced criticism, with legal scholars questioning the need for new rights and pointing out that such a regime could stifle beneficial BCI research for disabled patients.

While the legal action taken by Chile is the most impactful and far-reaching to date, other countries are considering following suit by updating existing laws to address the developments in neurotechnologies.

One of the cornerstones of ethical research is the principle of informed consent. Particular attention must be paid to the capacity of paralyzed patients and their family members to understand and consent to novel experimental therapies. Patients with a very limited ability to communicate may not be able to answer the more extensive questions associated with obtaining informed consent, which is often more complex than a simple opt-in procedure. Also, not all potential risks and side-effects (both physical and mental) can be foreseen, making it difficult for physicians to adequately inform their patients.

At the same time, it is important to keep in mind that denying treatment to a patient whose only hope may be communicating through a BCI carries a significant opportunity cost, such as a lifetime without communication, that may well be greater than the costs of participating in experimental treatments. Striking the right balance will be challenging for clinicians and researchers.

In a burgeoning new era of big (brain) data, longstanding ethical concerns about the hacking, leaking, unauthorized use or commercial exploitation of personal data will be amplified in the case of sensitive data on a person’s thoughts or movements (as controlled through neuroprosthetics). Paralyzed patients may be particularly vulnerable to neurodata theft given their reliance on caregivers, and increasingly on BCI technologies themselves, to communicate and move around the world. Care must be taken to ensure that information disclosed by a BCI represents a patient’s true and consensual thoughts.

And while the first advances in neurotech are likely to be therapeutic, such as for disabled and neurodivergent patients, future advances are likely to involve consumer applications such as entertainment, as well as military and security uses. The growing availability of neurotechnology in commercial settings, which are generally subject to far less regulation, only amplifies these ethical and legal concerns.

Data protection laws should be assessed on their ability to account for the new risks arising from increasing access to and collection of neurodata by organizations and entities of different types. Take the example – for the time being completely hypothetical – of using BCI to infer the thoughts of suspects in police interrogations.

One might say that BCI cannot be used in police interrogations as the error rate of misinterpreting a person’s neural data is currently unacceptably high, although accuracy could improve in the future. Or, one might say that BCI should never be used to “read” a person’s brain without their consent, regardless of the technology’s accuracy. Or, one might say that using BCI for interrogations is justified under certain extreme circumstances, such as when crucial information is needed to save someone’s life, and the suspect is refusing to cooperate.

Different people, societies, and cultures will disagree on where to draw the line. We are at an early stage of technological development and as we begin to uncover the great potential of BCI, both for therapeutic applications and beyond, the need to consider these ethical questions and their implications for legal action becomes more pressing.

Decoding our neuro future

This is a groundbreaking moment in our quest to understand the inner workings of our brains and minds. In the past year alone, neuroscientists have reversed spinal disabilities, translated fMRI data into text to understand what someone is thinking, and begun clinical trials to help people interact with objects using thoughts alone, something already seen in trials with monkeys two years ago. Such developments could all have transformative impacts on people’s lives.

At the same time, it’s important to note that research such as the HuthLab study uses a very small sample, and that the training process for its semantic decoder is complex, time-consuming and expensive. Add to this the fact that fMRI, although non-invasive, is not wearable, and it is clear these methods are not set to leave tightly controlled laboratory settings any time soon.

However, the HuthLab researchers suggest that in time, fMRI could be replaced by functional near-infrared spectroscopy (fNIRS), which, by “measuring where there’s more or less blood flow in the brain at different points in time”, could give similar results to fMRI using a wearable device.

Certainly, the rapidly growing global investment in neurotechnologies such as this, by governments and private actors alike, shows that the world is eager to create accessible BCIs suited to function both as medical devices and as commercial consumer goods. By the middle of 2021, total investment in neurotechnology companies amounted to just over $33 billion.

One of the most high-profile companies is Musk’s Neuralink. “Initial results show promising neuron spike detection,” Musk tweeted on January 29, of his neurotech startup’s first implanted chip in a human brain. The implant is said to include 1,024 electrodes, yet is only slightly larger than the diameter of a red blood cell. According to Neuralink: “Its small size allows threads to be inserted with minimal damage to the [brain] cortex.”

While this wireless implant is currently being developed as a medical device, aiming to enhance the quality of life of patients with various neurological conditions (Neuralink’s clinical trial has enlisted people aged 22 and above living with quadriplegia), Musk stated on X (formerly Twitter) that the ultimate aim is to create a device that “enables control of your phone or computer, and through them almost any device, just by thinking”.

Indeed, commercial neuroimaging devices are already on the market. The Kernel Flow, for example, is a commercially available, wearable headset that uses fNIRS technology to monitor brain activity. Another prominent player in commercial neuroimaging, Emotiv, has developed earbuds incorporating EEG technology that can monitor brain activity for signs of focus, attention and stress, with the stated ambition of boosting the wearer’s productivity at work.

While the era of big data has enabled increasingly personalized and complex approximations of people’s inner lives through our biometrics, genetics and online presence, nothing has been so powerful as to capture the inner workings of our minds – yet.

But as HuthLab’s research suggests, and Musk’s pronouncements claim, this may no longer be so far away. The dawn of a new era of brain-computer interfaces should be treated with great care and great respect, in acknowledgment of its immense potential to both help and harm our future generations.

This article is republished from The Conversation under a Creative Commons license.

Sourced from Study Finds

The Conversation is a nonprofit news organization dedicated to unlocking the knowledge of academic experts for the public. The Conversation’s team of 21 editors works with researchers to help them explain their work clearly and without jargon.

Top image: Truthstream Media
